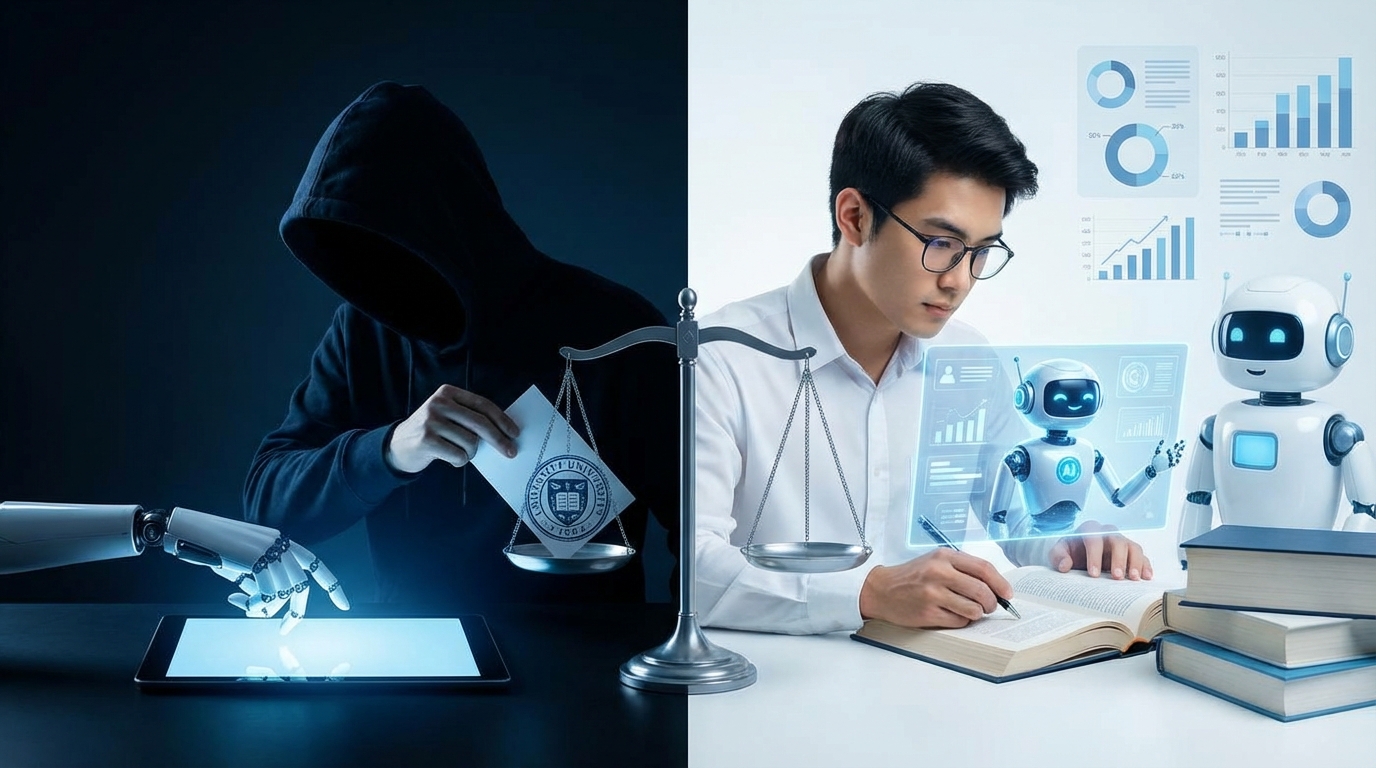

Last semester I watched two people in the same library row use ChatGPT in completely opposite ways. One was pasting the assignment prompt and asking for a full essay. The other was iterating on a research question, testing code, and comparing sources. Same tool. Totally different game.

Here is the TL;DR: AI is both a cheating tool and a research assistant. The difference is not in the model; it is in what you ask it to do, how transparent you are about using it, and whether it replaces your thinking or amplifies it. If you outsource your brain, it is cheating. If you use it to explore ideas, debug, summarize, and challenge your reasoning, it is closer to a calculator for thought.

What counts as “cheating” with AI?

I realised during a lecture that half the confusion around AI and cheating comes from everyone using the word “cheating” but meaning totally different things. Some people mean copying. Some mean skipping the hard part. Some just mean “I do not like this new thing.”

To get anywhere useful, we need a clearer split between:

- Academic dishonesty: Breaking the rules of your course or university.

- Intellectual laziness: Doing the minimum mental work while staying technically inside the rules.

- Tool-assisted learning: Using tools to think more, not less.

The first one is about ethics and policy. The other two are about how much you grow.

AI cheating is not “using AI.” AI cheating is using AI to pass off the AI’s work as your own original thinking and learning.

That means the same action can be fine in one context and totally wrong in another. Example: using AI to write code in a hackathon that allows tools is fine. Doing the same thing in a closed-book, no-tools exam is cheating.

A simple test that helps:

| Question | If the answer is “yes” | If the answer is “no” |
|---|---|---|
| Am I allowed to use AI for this task under the course rules? | Keep going to the next question. | You are in cheating territory. |
| Could I explain my process and AI usage honestly to my professor without hiding anything? | Probably acceptable. | You are hiding something. That is a warning sign. |
| If I lost AI access right now, could I still perform this task at a basic level? | You are building skills. | You are outsourcing your competence. |

If your answer to the second or third question is “no,” the tool is starting to replace your learning.

Ways AI is clearly a cheating tool

When people panic about AI, these are the uses they picture. Some are obvious, some are more subtle.

1. Generating full assignments and submitting them as your own

This is the classic “Write me a 2000-word essay on…” request. Paste prompt. Copy essay. Turn in.

Problems:

- It violates the academic integrity rules of nearly every course and university right now.

- It bypasses the skill the assignment is testing: argument building, structure, citation, clarity.

- It often sounds generic and can be caught by a human who reads a lot of student work.

If AI writes the whole assignment and you just send it, you are not “getting help.” You are signing someone else’s work with your name.

If you want to use AI in writing-heavy courses without cheating, the line is: AI can be your thinking partner, not your ghostwriter.

2. AI during no-tools exams and quizzes

There is not much gray area here. If your professor says “no external tools” and you secretly use ChatGPT, Claude, Gemini, or any other model, that is straightforward exam cheating.

Examples:

- Taking a multiple-choice quiz on your laptop while running an AI tab on the side.

- Using your phone in the bathroom during an exam to ask AI for solutions.

- Feeding screenshots of exam questions to an AI model.

People sometimes argue, “But the questions are basic, I could have done them anyway.” Then why risk academic misconduct for a few extra minutes of comfort?

3. AI-generated code without understanding it

Coding assignments have a special AI problem: models can spit out seemingly working code fast. Paste the prompt, get a function, pass the tests.

Here is the problem: if you cannot explain each part of the code, but you present it as your own, you are misrepresenting your competence.

Concrete risks:

- Professors asking you in a lab or oral exam to modify your code and you cannot.

- Group projects where your teammates rely on your “skills,” so your gaps become their problem too.

- Internships where your CV says “built X,” but in reality an AI built it and you cannot replicate it.

If AI wrote the code and you cannot debug it without AI, that line on your CV is more fiction than experience.

If a course allows AI, a safer pattern is: ask AI for help, then rewrite and annotate the solution in your own style, with clear comments and understanding.

4. Hiding AI usage when the rules require disclosure

Some courses now allow AI but ask you to:

- Describe how you used it in a short note.

- Mark sections where AI contributed.

- Attach your prompt history.

If you use AI but lie or omit that in your disclosure, you have moved into academic dishonesty, even if using AI itself was allowed.

This is where transparency matters more than the tool itself.

Ways AI can be a legitimate research assistant

Now for the other side. I realised during a group project that refusing to touch AI “out of principle” was actually hurting us more than helping. The teams that used it thoughtfully moved faster and had more space to think.

Here are uses that fit more in the “assistant” category, especially when the course or lab allows them.

1. Clarifying concepts when textbooks or slides are confusing

You know that moment when you have read the same paragraph in a paper five times and your brain still refuses to parse it? AI is good at reframing.

Valid use cases:

- Asking for plain-language explanations of a theorem, concept, or mechanism.

- Requesting step-by-step walkthroughs of derivations you already tried yourself.

- Getting analogies or simplified scenarios, then checking them against your notes.

The mental rule here: AI should shine a light on material you already engaged with, not be the first and only place you see it.

A stronger pattern looks like this:

1. Read the lecture notes or textbook section.

2. Write down what you think it means.

3. Ask AI: “Here is my understanding. Where is it wrong or incomplete?”

That way you are not replacing your thinking, you are stress-testing it.

2. Brainstorming research questions and angles

Coming up with a decent research question often takes more time than writing the actual paper. AI can help break out of the “blank page” phase.

Practical prompts:

- “I am interested in AI fairness in campus grading systems. Suggest 10 possible research questions that someone could study with survey data.”

- “Here are three papers I read. Summarize the gaps they mention and propose follow-up questions at an undergraduate level.”

Treat the output as rough clay, not final sculpture. Some suggestions will be too broad, trivial, or already overstudied. The skill is in filtering.

3. Literature triage, not literature review replacement

There is a tempting but risky shortcut: “Give me the related work and citations for X.” Models will often hallucinate papers, authors, and journals. That is not a minor glitch; it can ruin your credibility.

Safer patterns:

- Ask AI for keywords and search terms to use in Google Scholar, JSTOR, or arXiv.

- Paste abstracts from real papers and ask for comparisons or summaries.

- Use AI to group papers you already have by theme, method, or result.

Any reference that comes out of AI should be treated as a lead to verify, not as a source you can cite directly.

You earn real academic skill by:

- Checking every citation inside a proper database.

- Reading at least the introduction and conclusion yourself.

- Understanding methods enough to judge whether the paper actually supports your argument.

AI can help with the logistical overhead, but you still need to do the intellectual heavy lifting.

4. Drafting, editing, and polishing your writing

There is a spectrum here:

| Use | Low risk | Medium risk | High risk |
|---|---|---|---|
| Grammar and clarity edits | Fixing typos, awkward phrasing, tense issues. | Rewriting sentences for style while keeping your structure. | Turning bullet points into full paragraphs without your input. |
| Structural help | Asking for an outline based on your thesis. | Reordering your paragraphs for better flow. | Generating the entire outline from a vague topic. |
| Content creation | Suggesting examples after you ask for them. | Filling gaps you identified in your draft. | Writing whole sections you have not thought through. |

The safest area is grammar and micro-clarity. Many universities treat that much like using a spell checker, as long as the ideas are yours.

The risk grows as AI controls more of:

- The structure of your argument.

- The examples chosen.

- The actual sentences on the page.

If your syllabus allows AI editing, a transparent pattern is:

“I drafted this essay myself. Then I used AI to highlight unclear sentences and suggest alternative wording. I accepted, rejected, or modified those suggestions manually.”

That way, the professor knows the core thinking is yours.

5. Coding partner, not coding replacement

Modern coding models are surprisingly capable. But they are most helpful when you treat them like a strong TA, not a vending machine.

Good patterns:

- Ask for explanations of error messages that you already tried to debug.

- Use AI to compare two different algorithmic approaches you know about.

- Request tests or edge cases for functions you wrote.

- Ask for performance or readability improvements, then study the changes.
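As a rough illustration of the “request tests for functions you wrote” pattern, here is a small function a student might write, followed by the kind of edge-case checks an AI might suggest. The function `median` and the specific cases are hypothetical examples chosen for illustration, not output from any particular model.

```python
def median(values: list[float]) -> float:
    """Return the median of a non-empty list of numbers (the part you write yourself)."""
    if not values:
        # Edge case an AI reviewer might flag: an empty list has no median.
        raise ValueError("median() of empty list")
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        # Odd length: the middle element is the median.
        return ordered[mid]
    # Even length: average the two middle values.
    return (ordered[mid - 1] + ordered[mid]) / 2


def test_median_edge_cases() -> None:
    """Edge cases of the kind you might ask AI to suggest, then verify yourself."""
    assert median([5]) == 5               # single element
    assert median([3, 1, 2]) == 2         # odd length, unsorted input
    assert median([4, 1, 3, 2]) == 2.5    # even length averages the middle pair
    assert median([-1, -1, -1]) == -1     # duplicates and negatives
    try:
        median([])
    except ValueError:
        pass  # expected: empty input is rejected
    else:
        raise AssertionError("empty list should raise ValueError")


if __name__ == "__main__":
    test_median_edge_cases()
    print("all edge cases pass")
```

The division of labor is the point: you wrote `median`, the AI proposed cases, and you checked each one by reasoning about why it should pass before trusting it.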

Question to keep asking yourself:

“Could I rebuild a simpler version of this code from scratch without AI if I had to?”

If the honest answer is “no,” then you are collecting snippets, not building skill.

How professors and universities are starting to treat AI

During a seminar, my professor paused and said: “I do not care if you use AI. I care whether you can think without it.” That summed up most of the emerging policies I have seen.

Here are the main patterns on campuses right now.

1. Explicit AI-allowed / AI-banned labels per assignment

More courses are now tagging assignments with clear AI rules:

- AI banned: Exams, quizzes, in-class essays, sometimes short reflections.

- AI limited: OK for grammar or brainstorming but not for content generation.

- AI allowed with disclosure: Projects, essays, some labs.

- AI encouraged: Courses about machine learning, data science, or tech policy.

Your responsibility is to read these carefully and treat them like any other academic integrity rule.

2. Shifting assessment design to be harder to fake

Instead of “Write a generic essay about X,” you are seeing more:

- Assignments tied to local context (your campus, your city, your internship).

- Process-focused grading: drafts, outlines, and reflection logs.

- Oral defenses: short interviews where you explain your solution.

- In-class components: building part of the work without devices.

AI pushes assessment away from pure output and toward process and understanding. That actually benefits students who genuinely know their material.

3. AI detection tools and their limits

There are tools that claim to detect AI-generated text. Their accuracy is unreliable, especially on short texts, and they tend to flag writing by multilingual students as AI-generated more often. False positives can have serious consequences, so many universities now treat detector scores as one signal, not proof.

What this means for you:

- Do not count on detectors being unreliable as a safety net. Integrity policies treat intentional dishonesty very seriously, however it is discovered.

- If you use AI ethically, keep your drafts and AI chat logs as evidence of your process.

- If a professor raises concerns, being able to show your version history can protect you.

The most reliable “detector” is still a professor who has read your writing all semester and knows your voice.

The personal trade-off: grades now vs skills later

This is the part students do not always want to talk about. Even if AI shortcuts were 100% undetectable, there is a long-term cost.

I caught myself almost using AI to write a stats reflection that I could have done on my own. The honest reason was “I am tired.” That is understandable. But repeated across semesters, that habit changes who you become.

1. Skill debt is real

Think of using AI to skip practice as taking out a loan:

- You “save” time now by not learning that derivation, coding pattern, or writing structure.

- You “pay” later when internships, interviews, or real projects require those skills.

- The “interest” is the extra stress and catch-up you face when you realize the gap.

AI that writes for you can create a private gap between your grades and your actual ability. That gap feels fine until you hit a context where tools are limited or where you must improvise in real time.

2. Reputation and trust

In group projects and research labs, reputation spreads quietly:

- If you rely on AI too much, teammates notice when you cannot adapt the work.

- Supervisors learn who can handle complexity and who only functions with a tool.

- Those impressions influence references, opportunities, and who gets invited back.

The upside is also real: if you use AI to go deeper and faster, people notice that too. No one minds you using tools when you clearly understand the underlying work.

3. Internal compass: are you okay with how you are learning?

Forget policies for a second. Ask yourself:

“If everyone in my field learned the way I am learning right now, would I trust them as doctors, engineers, teachers, or researchers?”

If your answer is “no,” that is a signal your AI habits are out of balance.

Practical rules for using AI as a research assistant, not a cheating tool

Here is a concrete set of guardrails that has worked well for me and for other students trying to keep their integrity intact while still using modern tools.

1. Check the rules before touching AI

Basic but often skipped. For each course:

- Read the syllabus section on AI, chatbots, or “external tools.”

- Check assignment instructions for any AI notes.

- If in doubt, send a short email or ask after class. Be specific: “Can I use AI for grammar only?”

If your professor is vague, ask for concrete examples of allowed vs disallowed uses. That conversation alone can set you apart as someone who cares about doing it right.

2. Always do a “first pass” without AI

Before you ask AI anything, spend some time alone with the task.

For reading:

- Skim the article yourself.

- Write a quick summary in your own words.

- List questions you genuinely have.

For problems:

- Attempt the problem on your own, even if you get stuck.

- Circle the exact step where you got blocked.

Only then:

- Ask AI to explain the part you are stuck on.

- Compare solutions with your own; do not copy them.

This pattern trains you to name your confusion, which is a core academic skill.

3. Keep a simple AI usage log

Open a small document or notebook section where you record:

- Date

- Assignment or course

- What you asked AI to help with

- How you changed or used the output

Example entry:

“Oct 3, History 220 essay draft. Asked AI to suggest a clearer transition between paragraphs 2 and 3. Took one sentence structure idea, rephrased it myself. No content generation.”

Benefits:

- You stay conscious of how far you are going.

- You have evidence if a question about AI usage ever comes up.

- You can review your habits and see patterns you want to change.
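If you prefer a plain file to a document, the log can be as simple as a CSV with the four fields listed above. The sketch below is one hypothetical way to do that in Python; the file name `ai_log.csv` and the helper names `log_ai_use` and `read_log` are assumptions for illustration, and a spreadsheet or notebook works just as well.

```python
import csv
from datetime import date
from pathlib import Path
from typing import Optional

# Hypothetical column names mirroring the four log fields: date, course, request, how it was used.
FIELDS = ["date", "course", "asked_ai_for", "how_output_was_used"]


def log_ai_use(path: Path, course: str, asked_ai_for: str,
               how_output_was_used: str, when: Optional[date] = None) -> None:
    """Append one entry to the AI usage log, writing a header if the file is new."""
    is_new = not path.exists()
    entry_date = when if when is not None else date.today()
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "date": entry_date.isoformat(),
            "course": course,
            "asked_ai_for": asked_ai_for,
            "how_output_was_used": how_output_was_used,
        })


def read_log(path: Path) -> list[dict[str, str]]:
    """Return all entries so you can review your habits over the semester."""
    with path.open(newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


if __name__ == "__main__":
    log = Path("ai_log.csv")
    log_ai_use(
        log,
        course="History 220 essay draft",
        asked_ai_for="Clearer transition between paragraphs 2 and 3",
        how_output_was_used="Took one sentence structure idea, rephrased it myself. No content generation.",
    )
    print(f"{len(read_log(log))} entries logged")
```

Keeping entries in a structured file makes the third benefit easier: at the end of a semester you can scan the `how_output_was_used` column and see whether your usage drifted toward content generation.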

4. Never copy AI output verbatim into graded work without permission

If you treat AI text as a draft, you should rewrite it heavily:

- Change structure and order.

- Insert your own examples from class or life.

- Adjust the tone to match your real voice.

- Cross-check every factual claim and citation.

If a course explicitly allows pasting AI text with disclosure, follow the exact rules your professor sets. Some will ask you to quote AI like a source. Others will ask you to mark sections with comments.

5. Cross-check everything factual

AI models sound confident, but they are not reliable sources. They can:

- Invent articles and authors.

- Misstate dates, names, or numbers.

- Mix together multiple theories or frameworks incorrectly.

Safer workflow:

- Use AI to generate hypotheses or rough outlines of arguments.

- Verify each claim using textbooks, lectures, or peer-reviewed sources.

- Only cite sources you have actually checked yourself.

Treat AI as a brainstorming partner, not a bibliography.

6. Use AI to practice thinking, not to skip it

Some of the strongest uses of AI are actually for self-testing:

- After writing a solution, ask AI to critique it and propose counterarguments.

- Explain a concept to AI as if it were a first-year student, then ask it to point out missing pieces.

- Ask AI to generate quiz questions on a topic you just studied, then answer them without help.

This flips the relationship: AI becomes the person asking you hard questions, not the one answering yours.

Where this is heading for students building careers

If you are ambitious about research, startups, or any field where serious thinking matters, your relationship with AI will follow you beyond campus.

1. Grad school and research labs

Supervisors are already asking:

- Can this student read complex material and extract the core idea?

- Do they understand methods at a level deeper than “asked AI for code”?

- Can they reason formally, argue clearly, and spot flaws?

Responsible AI use can help you:

- Scan more papers, faster, then choose what to read in depth.

- Draft proposals or abstracts more quickly, leaving more time for design and execution.

- Automate boring parts of data cleaning so you can focus on analysis.

Irresponsible AI use will show up when:

- You cannot defend your work at a conference.

- Your code or methods fall apart when someone tweaks constraints.

- You miscite or misrepresent prior work because you trusted auto-generated references.

2. Startups and student ventures

If you are building something on campus, AI can be a serious multiplier:

- Quick mockups of landing pages and marketing copy.

- Market research summaries from messy sources you already collected.

- Rapid prototyping of simple apps or data analysis.

But it can also tempt you into skipping key work:

- Copying generic strategies instead of talking to real users.

- Building features that an AI suggested without aligning them to any real need.

- Shipping products that you cannot maintain without AI rewriting them each time.

Here the same rule applies: tools are great, but only if you still understand what you are building and why.

3. Long-term credibility as someone who knows their stuff

Eventually, degrees matter less than what you can actually do. When AI is everywhere, the signal moves from “who can access tools” to “who can think rigorously with and without tools.”

Habits that will matter:

- Being explicit about your methods and sources.

- Showing your process instead of just your polished output.

- Balancing speed with depth instead of always picking the shortest path.

The question is not “Will AI make students obsolete?” The question is “Which students will learn to work with AI in a way that makes them more capable, not less?”

If you treat AI as a cheating tool, it will quietly shrink your competence. If you treat it like a demanding research assistant that helps you think, question, and build, it can raise your ceiling. The hard part is being honest with yourself about which version you are choosing each time you open a new chat window.